Google provides some very interesting information about domain rankings in the Google Search Console, but this data differs fundamentally from the data we deliver in the Toolbox. This article details those differences.

- There's no 'best' when there's no benchmark.

- Differences in data collection.

- Good weather leads to alternative data.

- No Comparison of Mobile and Desktop Rankings

- No reliable Ranking Distribution.

- Incorrect view of Problems and Opportunities

- Lost Rankings, or No Searcher?

- Data consolidation destroys transparency.

- Keyword totals are not the same

- Average positions can be confusing

- Data is delayed before being delivered.

- Search Console data not complete

- Background: The Origins of the Google Search Console

In August 2015 we were the first provider to integrate the new Google Search Console API (formerly Google Webmaster Tools) into the SISTRIX Toolbox for our customers. Because both the data collection method and the objectives of Google Search Console differ from those of the SISTRIX Toolbox, questions often arise about the comparability of the two data sources. This article answers those questions.

There’s no ‘best’ when there’s no benchmark.

Best performance is often only discovered through direct comparison with competitors. It was no coincidence that the 10-second barrier for the 100 meters was broken three times on the ‘night of speed’, after one runner proved it was actually possible.

Competitor comparison is one of the core features of the Toolbox. We show you how good you are in comparison with your competitors, which allows you to truly benchmark your performance. Are you doing especially well, or are you under-performing?

This kind of data is completely missing from Google Search Console. As a result of its historical development, you’ll see data for your own domain but no environment in which to compare and benchmark it. Your click rate might be going up, but is your competitors’ click rate going up faster?

Differences in data collection.

The data collection methods of Google Search Console and SISTRIX are fundamentally different. Google only takes a measurement when a results page is actually viewed by a searcher. If a results page is not viewed during the period, no data is collected.

For the Toolbox we don’t rely on user actions to trigger a data point. We continuously and reliably collect the rankings ourselves, and this difference shows itself in many of the following points.

Good weather leads to alternative data.

Because of the different data collection method, any changes in demand (that is, the number and quality of searches) will directly impact the Search Console results. If the weather that week is especially good, for example, then you can expect less search activity. In the search results themselves, however, there are rarely any changes.

Apart from the weather, there are other events that can impact search activity. Seasons, school holidays, news events, sports events, advertising campaigns, and many other external factors can have an impact on the measurements. Structurally, the Search Console is nearer to an analytics tool like Google Analytics than to a specific SEO measurement tool like the Toolbox.

An analysis of cause and effect is essential in an environment as complex as SEO, and the numerous external influences on the Search Console data make this analysis more difficult, or even impossible.

No Comparison of Mobile and Desktop Rankings

In most countries, the number of searches made on smartphones has overtaken the number of searches made on desktop, so a reliable comparison is important. Google’s move to a mobile-first index also underlines the importance of this comparison in any future-proof SEO strategy.

Google Search Console does not enable a valid comparison between mobile and desktop data because user behaviour differs significantly between the two. For example, a smartphone user might click through to the second or third page of results less frequently than desktop users (still) do. Since the GSC data is based on this user behaviour, it simply cannot serve as a basis for comparison.

In the Toolbox we ensure that desktop and mobile SERPs can be compared with confidence.

No reliable Ranking Distribution.

The ranking distribution is an elementary basis for the evaluation of content formats. Only content formats that have above-average representation on the first Google results page are worth further development.

Because Search Console data is only collected when a searcher accesses the corresponding results page, the ranking distribution cannot be correctly determined from Search Console data. There are simply too few users accessing the second, third or tenth page of results to provide a reliable evaluation.
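The effect can be illustrated with a minimal sketch. All keywords, positions and impression counts below are hypothetical, and the filter "at least one impression" is only a simplified stand-in for impression-based collection, not a description of how Google actually processes its data.

```python
from collections import Counter

# Hypothetical rankings for one domain: (keyword, organic position, impressions).
# "impressions" stands in for how often searchers actually viewed that result.
rankings = [
    ("kw_a", 2, 480),
    ("kw_b", 7, 350),
    ("kw_c", 14, 3),
    ("kw_d", 18, 0),
    ("kw_e", 25, 0),
    ("kw_f", 33, 0),
    ("kw_g", 91, 0),
]

def results_page(position, per_page=10):
    """Map a position to its results page (1-10 -> page 1, 11-20 -> page 2, ...)."""
    return (position - 1) // per_page + 1

def page_distribution(rows):
    """Count how many keywords rank on each results page."""
    return Counter(results_page(position) for _, position, _ in rows)

def first_page_share(distribution):
    """Fraction of all counted keywords that rank on page 1."""
    return distribution[1] / sum(distribution.values())

# A crawler records every ranking, whether or not anyone looked at it.
crawled = page_distribution(rankings)

# Impression-based data only contains rankings that at least one searcher viewed.
observed = page_distribution(row for row in rankings if row[2] > 0)

print(f"crawled:  {dict(crawled)}, first-page share {first_page_share(crawled):.0%}")
print(f"observed: {dict(observed)}, first-page share {first_page_share(observed):.0%}")
```

With these made-up numbers, the first-page share rises from about 29% in the crawled data to about 67% in the impression-based view, although the underlying rankings are identical.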

An example of grave misjudgement resulting from the use of Search Console data is shown in this blog post from September 2015.

Incorrect view of Problems and Opportunities

The principle is that Google only measures the keywords that are already ranking on the first page, but not the potentially interesting keywords that rank lower down. Unless a user happens to click through to the 9th page of search results, you’re not going to see those rankings in the Search Console.

Lost Rankings, or No Searcher?

The same principle affects keywords that ranked previously but no longer appear in the data. Did the keyword stop ranking, or did no one view the relevant page of search results? When comparing dates, it’s impossible to say whether the page was absent from the search results or whether simply no one was viewing them.

This important evaluation is possible with the Toolbox. We ensure that when comparing dates, the data has comparable content and scope.
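The ambiguity can be made concrete with a small sketch. The function name `explain_missing`, the keyword sets and the crawled positions are all hypothetical; the point is only that impression-based data alone leaves the question open, while an independent crawl of the same date resolves it.

```python
def explain_missing(keyword, reported_after, crawled_after=None):
    """Classify a keyword that was reported on an earlier date.

    reported_after: keywords present in the later impression-based export.
    crawled_after:  optional dict of keyword -> position from an independent crawl.
    """
    if keyword in reported_after:
        return "still reported"
    if crawled_after is None:
        # Impression-based data alone cannot separate the two causes.
        return "unknown: lost ranking or no searcher"
    if keyword in crawled_after:
        return "still ranking, but no one viewed the result"
    return "ranking lost"

# Hypothetical data for the later comparison date.
reported_after = {"kw_a"}
crawled_after = {"kw_a": 2, "kw_b": 14}

print(explain_missing("kw_b", reported_after))
print(explain_missing("kw_b", reported_after, crawled_after))
print(explain_missing("kw_c", reported_after, crawled_after))
```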

Data consolidation destroys transparency.

Google has been consolidating Search Console data since 2019 based on the canonical URL given in an HTML page. With that, Google is creating an abstraction layer over the data making it unclear which URL was actually displayed in the search results. For larger websites this can be error-prone. As a result of this consolidation, configuration errors, redirects, AMP conversions and other cases can no longer be uniquely tracked.

Keyword totals are not the same

The Search Console API counts (and delivers) keywords as combinations of keyword/term, URL, device and country. The term “sistrix” alone is delivered as 130 different combinations, as you can see in this screenshot. In the Toolbox we count each keyword only once. In this admittedly extreme example, the GSC numbers take up 130 lines, while the SISTRIX keyword table lists only one line (the best ranking for the selected device and country). The totals therefore cannot be compared.
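The two counting methods can be sketched as follows. The rows, URLs and positions are hypothetical and only mimic the shape described above; the function names are illustrative and belong to neither the Search Console API nor the Toolbox.

```python
# Hypothetical rows: one per (keyword, URL, device, country) combination,
# plus the position reported for that combination.
rows = [
    ("sistrix", "https://example.com/", "desktop", "de", 1),
    ("sistrix", "https://example.com/", "mobile", "de", 1),
    ("sistrix", "https://example.com/toolbox/", "desktop", "de", 3),
    ("sistrix", "https://example.com/", "desktop", "gb", 2),
    ("seo tool", "https://example.com/toolbox/", "desktop", "de", 4),
]

def combination_count(rows):
    """Count every distinct keyword/URL/device/country combination."""
    return len({(kw, url, device, country) for kw, url, device, country, _ in rows})

def best_ranking_per_keyword(rows, device, country):
    """Keep one line per keyword: its best position for the selected device and country."""
    best = {}
    for kw, _url, row_device, row_country, position in rows:
        if (row_device, row_country) != (device, country):
            continue
        if kw not in best or position < best[kw]:
            best[kw] = position
    return best

print(combination_count(rows))
print(best_ranking_per_keyword(rows, "desktop", "de"))
```

Here the same five rows yield five combinations under the first method but only two keywords under the second, which is why the totals from the two sources should not be set side by side.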

Average positions can be confusing

The position information in the Search Console often has decimal places, so it is possible to achieve an average position of 2.6 for a keyword. These decimal places are based on Google’s method of counting the results.

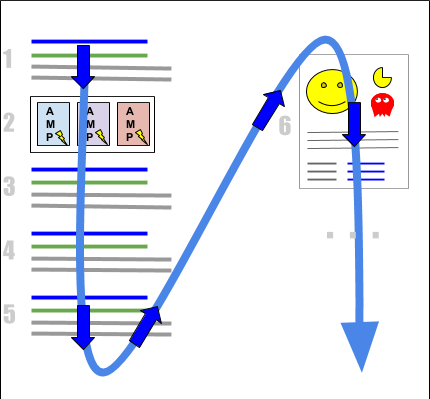

Google counts the results that contain a link to the target page from top to bottom and then continues with other search result elements, such as knowledge graph overlays.

This counting method may be understandable from the perspective of a search engine, but from the perspective of a website operator, this method can lead to incorrect assumptions.

If your page ranks in organic position 1 and the only additional element in the results is a knowledge panel in which the website also appears, Google would report an average position of 6 ((pos 1 + pos 11) / 2).
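The arithmetic of this example can be reproduced in a few lines. The sketch assumes, as the example does, that both appearances are averaged with equal weight and that the knowledge panel is counted as position 11; the function name is illustrative.

```python
def average_position(positions):
    """Unweighted mean of the positions at which a page appears for one query."""
    return sum(positions) / len(positions)

organic_position = 1
knowledge_panel_position = 11  # counted after the ten organic results in this example

print(average_position([organic_position, knowledge_panel_position]))  # 6.0
```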

Data is delayed before being delivered.

A few days pass between Google’s measurement and the availability of the data in the Search Console, which means you’re not working with the most current data. In addition, there are often problems with the tool itself. Simply put, GSC is not a core product but a necessary evil that helps Google avoid additional regulation.

Search Console data not complete

Historically, Search Console is the successor to the data derived from referrer-keyword information. However, that data is now filtered by Google for privacy reasons. Unfortunately, Google provides no information on the scope, extent and background of this keyword filtering. It is also unknown whether the filtering changes over time. Compared with the old solution based on the referrer string, the data in the Search Console is not the same: data is missing and the rules are unclear.

Background: The Origins of the Google Search Console

In order to understand why Google offers the data from the Search Console in the current form, knowledge of the development of Search Console (formerly Google Webmaster Tools) is helpful.

For a long time, the results of Google search were completely unencrypted (i.e. served without HTTPS) and, as a result, every webmaster could use the referrer field to determine the keyword that led to the visit to the site.

With the introduction of SSL encryption in late 2011 the situation changed. From then on, an increasing proportion of searches were encrypted and, as a result, Google stopped passing the keyword information to website operators. Instead of showing a keyword, the logs showed only “Not Provided”.

The basis for the change was that if people were searching through an encrypted channel, the keyword information should also be treated as confidential and not exposed to third parties.

In order not to leave website operators completely in the dark, the current performance report was introduced into the Google Search Console. There, webmasters can view keyword information again, although only after Google has filtered and limited the data.

The Search Console is, from Google’s perspective, the successor to the referrer field seen in web server log files and functions more as an extension of Google Analytics than as SEO software.