Modern websites are built from HTML, CSS, and JavaScript. For years, a standard piece of SEO advice was to make sure your website works even when JavaScript is disabled. Since then, Googlebot has learned to handle JavaScript well enough that the aforementioned advice now applies only in a limited capacity. Experiments, such as the one by Bartosz Góralewicz, show that Google now supports many of the features available in the most common JavaScript frameworks.

This makes it a good time to teach our Optimizer to crawl JavaScript-based pages. Starting today, you can enable this (still experimental) function in the crawl settings of your Optimizer project.

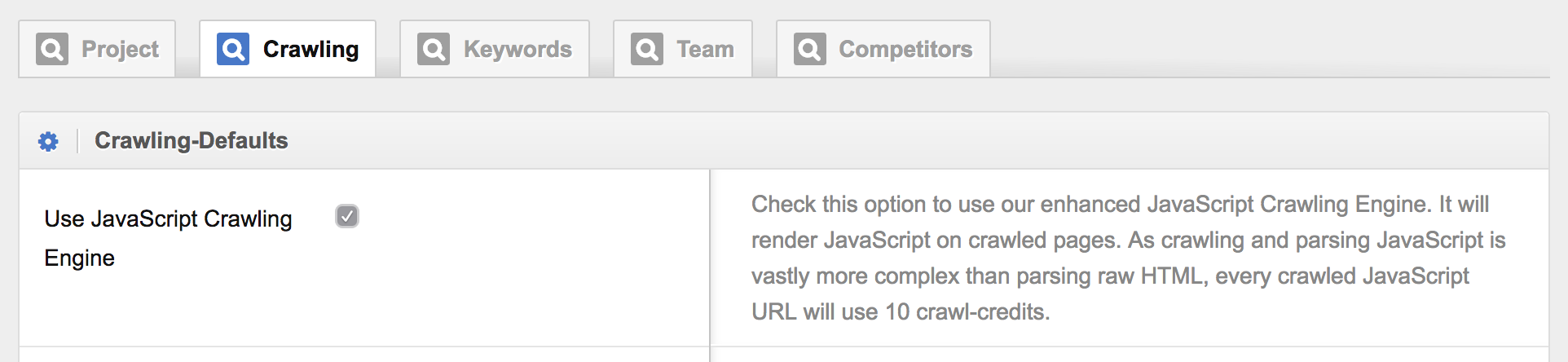

Activate JavaScript crawling within the project settings.

Evaluating JavaScript is much more complex than evaluating pure HTML pages. Because of this, JavaScript crawling in the Optimizer uses 10 crawl credits per page, rather than the single credit that HTML parsing costs. Since a basic Optimizer account already includes 500,000 crawl credits, nothing keeps you from evaluating even large and comprehensive JavaScript websites.
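
To get a feel for what the higher credit cost means in practice, here is a rough back-of-the-envelope sketch in Python. It only uses the figures stated above (1 credit per HTML page, 10 credits per JavaScript page, 500,000 credits in a basic account); the function name `max_pages` is made up for illustration and is not part of the Optimizer.

```python
# Credit costs per page, taken from the figures in this post.
CREDITS_PER_HTML_PAGE = 1
CREDITS_PER_JS_PAGE = 10


def max_pages(credits: int, cost_per_page: int) -> int:
    """Return how many whole pages fit into the given credit budget.

    Hypothetical helper for illustration only.
    """
    if cost_per_page <= 0:
        raise ValueError("cost_per_page must be positive")
    return credits // cost_per_page


budget = 500_000  # crawl credits in a basic Optimizer account

print(max_pages(budget, CREDITS_PER_HTML_PAGE))  # 500000
print(max_pages(budget, CREDITS_PER_JS_PAGE))    # 50000
```

Under these assumptions, the basic budget covers up to 50,000 pages crawled with JavaScript enabled, compared to 500,000 pages with HTML parsing alone.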