Like robots meta tags, X-Robots-Tags are used to manage the crawling and indexing of pages. But there is a key difference: X-Robots-Tags are sent in the HTTP response headers (not in the HTML) and are therefore not limited to HTML files.

Do you want some of your webpages to stop appearing in search results? You can achieve this through the use of robots meta tags. But what do you do if you want a page to be indexed, but not the image on it? In this case, and in a few other cases, the X-Robots-Tag is ideal.

How to use the X-Robots-Tag

The X-Robots-Tag is an HTTP protocol header. For example, a response with an X-Robots-Tag might look like this:

HTTP/1.1 200 OK

Date: Wed, 13 May 2020 19:30:25 GMT

(...)

X-Robots tag: noindex

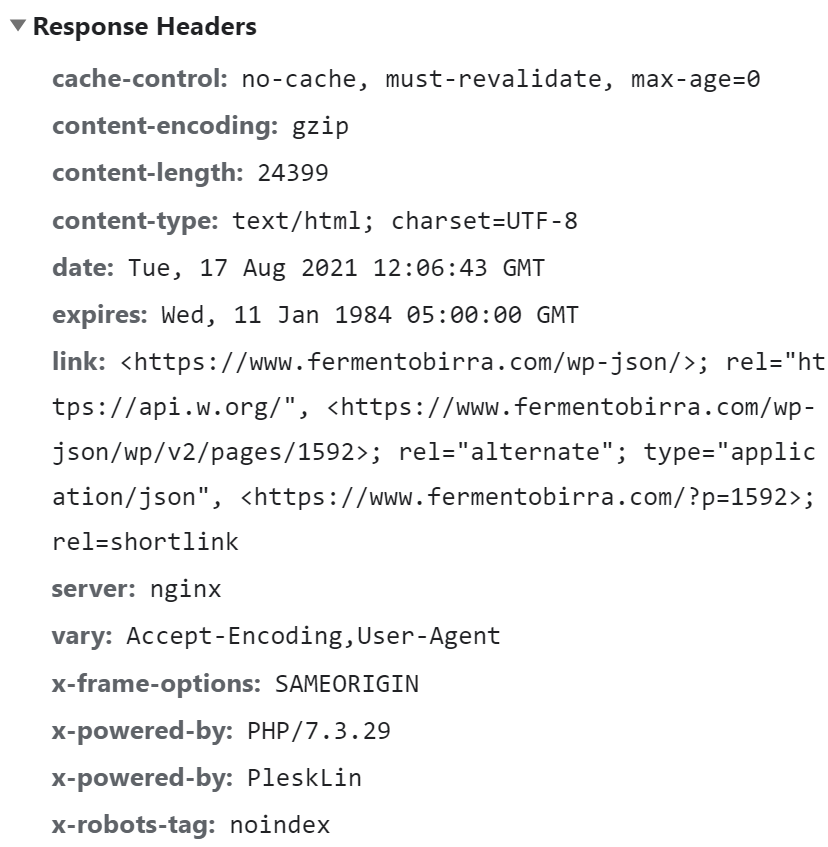

(...)This can be seen in Chrome developer tools when viewing the http response header. Here’s an example with the x-robots-tag at the bottom:

As with robots meta tags, you can combine several directives, either as a comma-separated list in one header or by sending multiple X-Robots-Tag headers. According to Google, the same directives are valid in both cases. However, the exact configuration depends on the web server and on what should be kept out of the index.
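To verify which directives a response actually carries, the headers can also be read with a short script instead of the browser. The following is a minimal sketch in Python using only the standard library. The function names `parse_directives` and `fetch_directives` are made up for this illustration, and the parser assumes simple comma-separated directives such as `noindex, nofollow`; it does not handle user-agent prefixes or date values that contain commas.

```python
from urllib.request import Request, urlopen


def parse_directives(header_values):
    """Split X-Robots-Tag header values into a flat list of directives.

    Assumes simple comma-separated values such as "noindex, nofollow".
    """
    directives = []
    for value in header_values:
        for part in value.split(","):
            part = part.strip().lower()
            if part:
                directives.append(part)
    return directives


def fetch_directives(url):
    """Send a HEAD request and return the directives from all X-Robots-Tag headers."""
    request = Request(url, method="HEAD")
    with urlopen(request) as response:
        # get_all returns every X-Robots-Tag header, or None if there is none
        values = response.headers.get_all("X-Robots-Tag") or []
    return parse_directives(values)


# Two headers, one of them carrying a comma-separated list
print(parse_directives(["noindex, nofollow", "noarchive"]))
# prints ['noindex', 'nofollow', 'noarchive']
```

Calling `fetch_directives` with the URL of an image or PDF on your own site should then list the directives that the server sends for that file.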

On Apache, one of the most widely used web servers, X-Robots-Tags are set in the httpd.conf or .htaccess file (the Header directive requires mod_headers to be enabled). For example, to keep all PNG and GIF files on a site from being indexed, add the following code to the website's root .htaccess file or to httpd.conf:

<Files ~ "\.(png|gif)$">

Header set X-Robots-Tag "noindex"

</Files>

The Files (with ~) and FilesMatch directives allow regular expressions to be used to match large numbers of files in a single line of code.
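Because a wrong pattern can deindex the wrong files, it can help to try the regular expression against a few sample file names before deploying it. The sketch below does this in Python; it assumes that Python's `re` module and Apache's regex engine agree on a pattern this simple, and the file names are invented for illustration.

```python
import re

# Same pattern as in the Files block above
PATTERN = re.compile(r"\.(png|gif)$")


def is_matched(filename):
    """Return True if the file name would be matched by the pattern."""
    return PATTERN.search(filename) is not None


# Hypothetical file names, chosen to show matches and non-matches
for name in ["logo.png", "banner.gif", "photo.jpg", "archive.png.zip"]:
    print(name, is_matched(name))
# prints:
# logo.png True
# banner.gif True
# photo.jpg False
# archive.png.zip False
```

The `$` anchor is what keeps `archive.png.zip` from matching: the extension has to be at the very end of the file name.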

When X-Robots-Tags are the right choice

X-Robots-Tags should only be used by advanced users, as mistakes can have serious consequences for your web pages. We therefore recommend making a backup of the affected configuration files beforehand, just to stay on the safe side.

Why should you use X-Robots-Tags at all? They are often the right choice despite their complicated application, especially in the following situations:

- You want to provide specific instructions for non-HTML files like images or PDFs

- You want to deindex a large number of pages with certain parameters or a complete subdomain

In the first case, X-Robots-Tags are the only option; in the second, they save time and effort compared to adding robots meta tags to each page individually.
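The second situation boils down to one rule that decides, per URL, whether a noindex header should be sent. The following Python sketch illustrates that decision logic only; it is not a server configuration. The parameter names, the subdomain, and the function name `x_robots_tag_for` are all hypothetical.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical rules: query parameters and hosts that should not be indexed
BLOCKED_PARAMS = {"sessionid", "sort"}
BLOCKED_HOSTS = {"staging.example.com"}


def x_robots_tag_for(url):
    """Return the X-Robots-Tag value to send for a URL, or None for no header."""
    parts = urlsplit(url)
    # Rule 1: a complete subdomain is kept out of the index
    if parts.hostname in BLOCKED_HOSTS:
        return "noindex"
    # Rule 2: any URL carrying one of the blocked parameters is kept out
    params = parse_qs(parts.query, keep_blank_values=True)
    if not BLOCKED_PARAMS.isdisjoint(params):
        return "noindex"
    return None


print(x_robots_tag_for("https://www.example.com/shoes?sort=price"))  # prints noindex
print(x_robots_tag_for("https://staging.example.com/"))              # prints noindex
print(x_robots_tag_for("https://www.example.com/shoes"))             # prints None
```

A single rule like this covers every matching URL, which is where the time saving over per-page meta tags comes from.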