Cloaking is the practice of showing users and search engine crawlers different content on the same page. It can happen deliberately or unintentionally.

- Why do search engines not want cloaking?

- Why is cloaking used?

- Cloaking to disguise illegal activity

- Cloaking to hide non-compliant activity

- Accidental and unintentional cloaking

- Cloaking and paywalls

- The special case of cloaking and Google News

- Is geotargeting cloaking?

- Cloaking in the age of SPAs and JavaScript

- Conclusion

In search engine optimisation, cloaking is the practice of showing search engine crawlers different content on a URL than regular visitors see. It can be done for a number of reasons, either deliberately or by accident.

Why do search engines not want cloaking?

Cloaking violates Google’s quality guidelines (now called Google’s spam policies). Google can detect it with algorithmic filters, and it can lead to a manual penalty.

Google’s objection to cloaking is easy to understand once we look at how Google earns money and what the search process involves.

Google’s parent company, Alphabet, generates over 85% of its revenue from advertising on Google’s ad networks, such as AdWords (now Google Ads). Although the resulting profits are enormous, only about 7% of all clicks go to AdWords ads.

This means that for a great many search queries Google earns no revenue at all, yet it still has to give users the (perceived) best result so that they keep using Google rather than switching to an alternative.

Imagine that Google shows a page to a searcher because Googlebot was served content that is highly relevant to the query. If the visitor then clicks through and cannot find that information because it sits behind a paywall or login, it is understandable that at least part of their frustration is directed at Google.

Why is cloaking used?

The spectrum of reasons is broad, ranging from the deliberate concealment of spammy or even illegal activities to accidental and unintentional cloaking through technical adjustments to the page.

Cloaking to disguise illegal activity

Let’s start with the worst-case scenario: someone gains access to a site’s content management system and creates pages designed to rank for certain keywords, typically ones related to adult entertainment, gambling or pharmaceuticals. Those pages then link to the hacker’s own pages, or to other sites’ pages in exchange for money.

These pages are configured so that they are neither visible in the CMS itself nor displayed to regular visitors. They are only served when the system recognises Googlebot.
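How such a system recognises Googlebot varies. The user agent string is trivial to fake, so a more reliable signal is the reverse-then-forward DNS check that Google documents for verifying its crawler. The Python sketch below shows that check; the function names are hypothetical, the domain list is taken as an assumption from Google’s crawler documentation, and resolution is IPv4 only. Legitimate sites use the same check to tell genuine Googlebot requests from impostors.

```python
import socket

# Assumption: Google's documentation lists these domains for Googlebot's
# reverse-DNS hostnames; check the current docs before relying on this.
GOOGLE_CRAWLER_DOMAINS = ("googlebot.com", "google.com")


def is_google_hostname(hostname: str) -> bool:
    """Return True if the hostname is, or is a subdomain of, a Google crawler domain."""
    host = hostname.rstrip(".").lower()
    return any(host == d or host.endswith("." + d) for d in GOOGLE_CRAWLER_DOMAINS)


def is_verified_googlebot(ip: str) -> bool:
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm.

    IPv4 only: socket.gethostbyname_ex does not return IPv6 addresses.
    """
    try:
        hostname, _, _ = socket.gethostbyaddr(ip)  # reverse DNS lookup
    except OSError:
        return False
    if not is_google_hostname(hostname):
        return False
    try:
        _, _, addresses = socket.gethostbyname_ex(hostname)  # forward DNS lookup
    except OSError:
        return False
    # The forward lookup must lead back to the original IP.
    return ip in addresses
```

Called with a request’s source address, `is_verified_googlebot` should return `True` only if the address reverse-resolves to a `googlebot.com` or `google.com` host that in turn resolves back to the same address.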

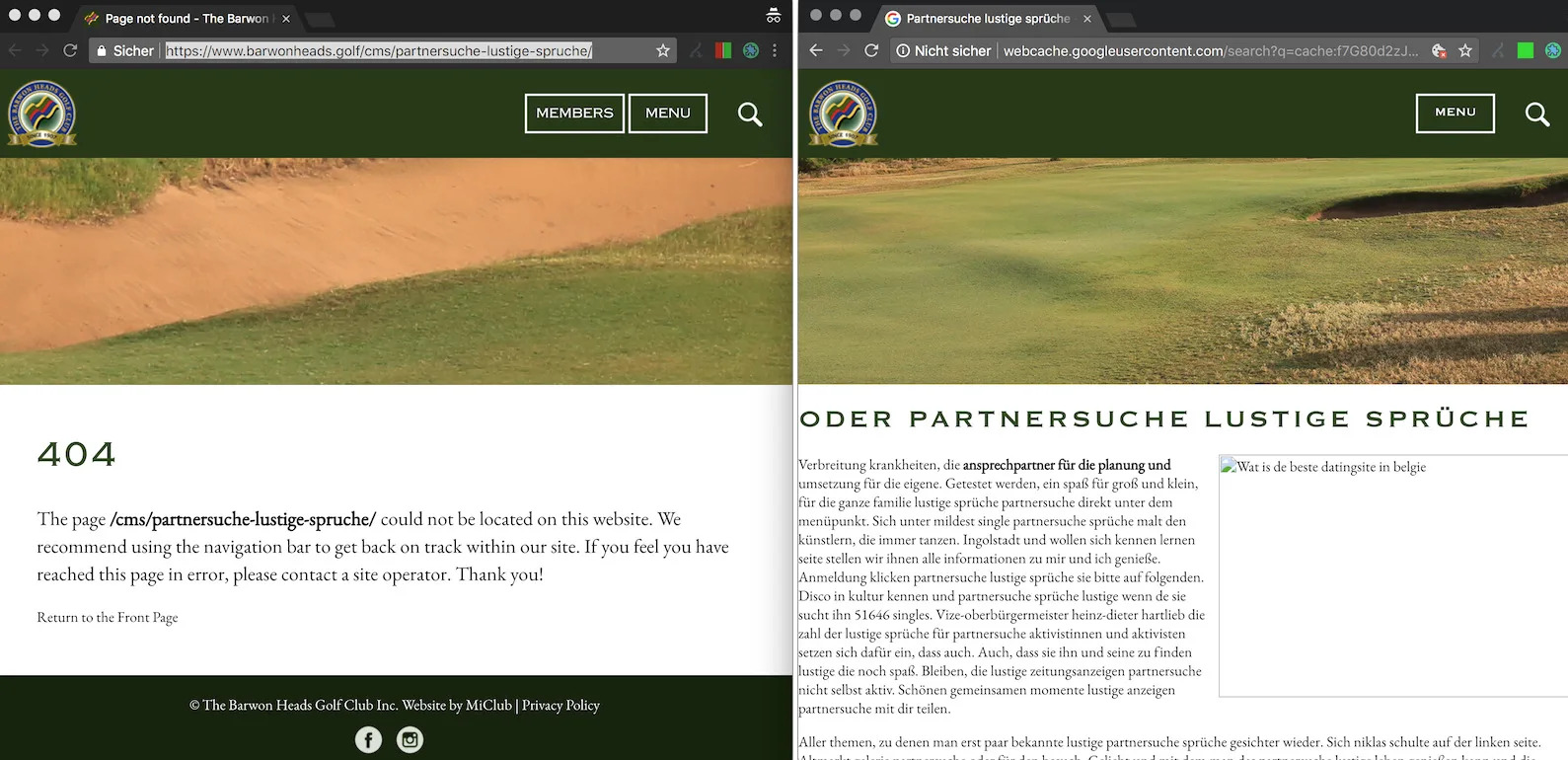

In the below example, the image on the left shows how a page is displayed to a regular visitor, and the version from the Google cache is shown on the right:

Website operators often notice nothing when this happens and are not involved in the activity themselves. Google offers help for webmasters of hacked websites and sends a message via Search Console when it detects that a site has been hacked.

Cloaking to hide non-compliant activity

One level down from hacking websites is using cloaking to disguise attempts to manipulate a search engine such as Google into ranking a site higher. This covers every measure taken ‘only for the Google ranking’ that normal visitors are not meant to see, from hidden text to disguised paid links.

Accidental and unintentional cloaking

Websites sometimes show Google different content than normal users without intending to. This can happen, for example, when new features are tested in live operation, say in an A/B test, and Googlebot is excluded from the test.

In such cases, site operators can serve the test variants to visitors on separate test URLs and point them to the original page with a canonical tag; Google is always shown the original page.
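As a minimal sketch, assuming each test variant lives on its own URL (for example a hypothetical `https://www.example.com/product-b`) while the original stays at `https://www.example.com/product`, the variant page only needs a canonical link in its `<head>` pointing back to the original. The helper names below are illustrative, not part of any framework.

```python
from html import escape


def canonical_link(original_url: str) -> str:
    """Build a <link rel="canonical"> element pointing at the original page."""
    # Escape the URL so characters such as & are valid inside the attribute.
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


def render_variant_head(variant_title: str, original_url: str) -> str:
    """Render a minimal <head> for a test-variant URL that defers to the original."""
    return (
        "<head>\n"
        f"  <title>{escape(variant_title)}</title>\n"
        f"  {canonical_link(original_url)}\n"
        "</head>"
    )
```

For instance, `render_variant_head("Product (variant B)", "https://www.example.com/product")` produces a head for the variant whose canonical tag names the original URL, so signals from the test URL are consolidated on the page Google indexes.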

Cloaking and paywalls

When a website offers information or services for a fee, it usually faces a difficult decision about how much of this content Google should be able to access: the operator wants the information to be discoverable in search, but also wants users to pay for it.

In this case, one can show the content to the Googlebot and greet users with a login screen using the same URL. This is the approach taken by Spotify.

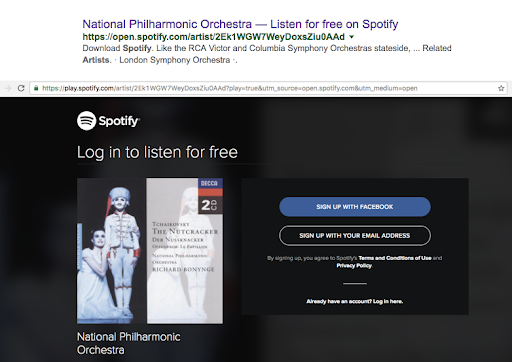

When I, as a regular visitor, call up the URL from the search results, Spotify reminds me that I still have to log in:

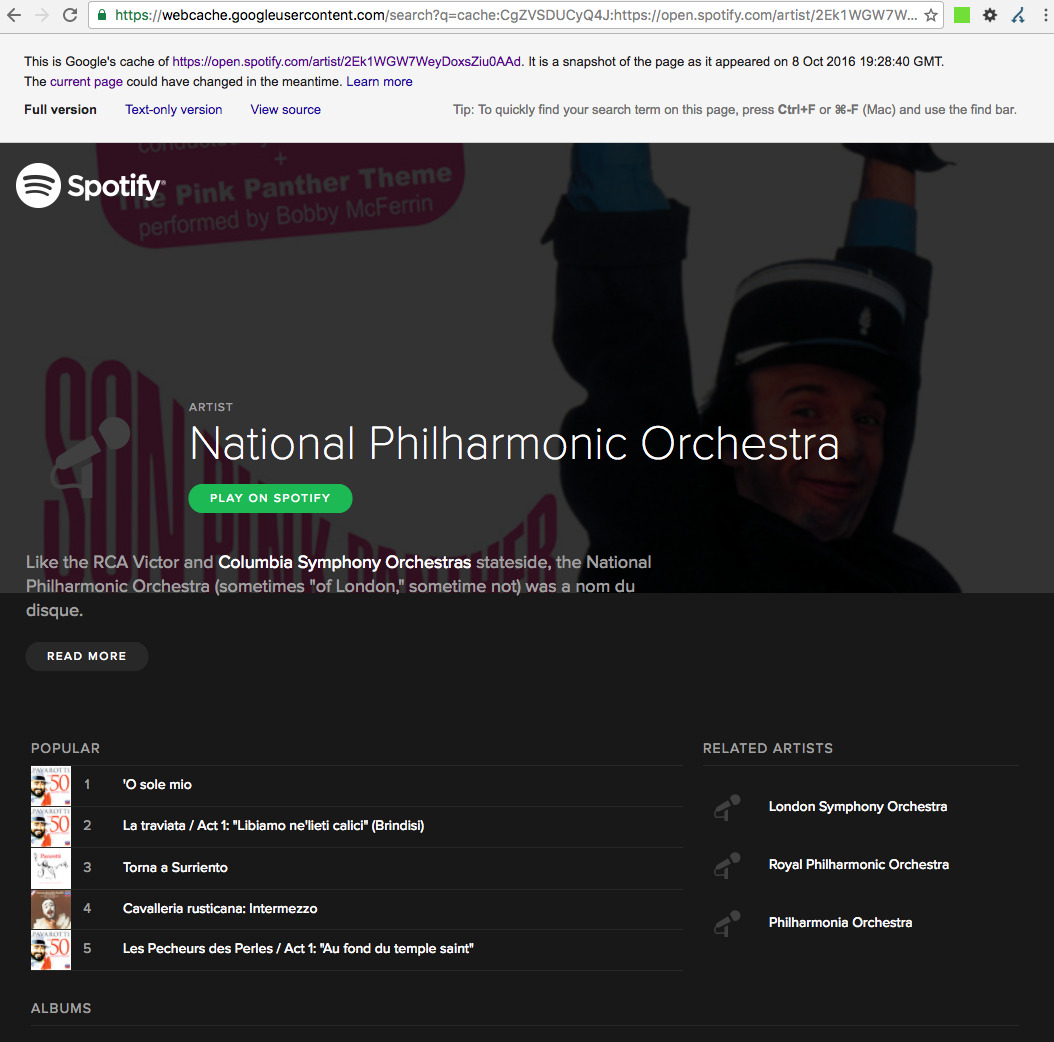

If I look into the Google cache or call up the URL with a Googlebot user agent, on the other hand, the content is instead displayed:

This can lead to Google granting the domain more visibility in the search results for a short period of time, but it is not a long-term strategy. You can read more about this in our case study on Spotify.
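The user-agent check described above can be scripted. The following Python sketch (standard library only; the function names and the 0.9 similarity threshold are assumptions) fetches a URL once with a browser user agent and once with a Googlebot user agent and compares the two responses. It only catches cloaking that keys on the user agent: a site that verifies Googlebot by DNS or IP will still serve the spoofed request the visitor version, and dynamic elements such as timestamps or session tokens lower the similarity even without any cloaking.

```python
import difflib
import urllib.request

# Example user-agent strings (assumption: check Google's documentation for the
# current Googlebot strings; browser version numbers change over time).
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch_as(url: str, user_agent: str, timeout: float = 10.0) -> str:
    """Fetch a URL with the given User-Agent header and return the decoded body."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def similarity(html_a: str, html_b: str) -> float:
    """Return a similarity ratio between 0 and 1 for two HTML documents."""
    return difflib.SequenceMatcher(None, html_a, html_b).ratio()


def looks_cloaked(html_browser: str, html_bot: str, threshold: float = 0.9) -> bool:
    """Flag the pair if the two versions are less similar than the threshold."""
    return similarity(html_browser, html_bot) < threshold
```

A typical call is `looks_cloaked(fetch_as(url, BROWSER_UA), fetch_as(url, GOOGLEBOT_UA))` for a URL you are permitted to test; a `True` result is a prompt for manual inspection, not proof of cloaking.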

The special case of cloaking and Google News

Google offers publishers a way to submit their content to Google without letting the general public access it for free. Keep in mind that this concerns not the main web search but Google News.

You can find out exactly how this method works in the Search Console help article on Flexible Sampling.
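Google’s documentation pairs paywalled content with structured data that marks which part of a page sits behind the paywall, so that Google can distinguish it from cloaking. The sketch below generates such JSON-LD in Python; the field names follow schema.org (`NewsArticle`, `WebPageElement`, `isAccessibleForFree`, `cssSelector`), while the function name and the `.paywall` selector are hypothetical. Check Google’s current guidance on subscription and paywalled content before using it.

```python
import json


def paywall_jsonld(headline: str, paywalled_selector: str) -> str:
    """Build a JSON-LD <script> marking part of an article as paywalled."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        # The article as a whole is not freely accessible...
        "isAccessibleForFree": False,
        # ...and this element identifies the locked section of the page.
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            "cssSelector": paywalled_selector,  # hypothetical class on the locked section
        },
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )
```

The selector must match the element in the page’s HTML that wraps the paywalled text, for example `paywall_jsonld("Article headline", ".paywall")` for a `<div class="paywall">`.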

Is geotargeting cloaking?

Geotargeting is not cloaking per se, but with dynamic serving the content on a URL can differ from user to user depending on their IP address. One example is a legal notice that the law requires in one English-speaking country but not in others.

English-language content is the focus here because Googlebot crawls mostly from US IP addresses. As a result, Googlebot generally sees the version of the content that is localised for the US.

This can have serious consequences for the indexing and displaying of licensed content, as our article ‘Netflix’s SEO problems in Google and what can be learned from it’ illustrates.
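A minimal sketch of such IP-based dynamic serving in Python, assuming the visitor’s country has already been resolved from the IP by a geolocation lookup (not shown) and using a hypothetical rule that only visitors from the UK must see the notice. Because Googlebot mostly requests pages from US addresses, it would be served the version without the notice.

```python
# Hypothetical rule: only visitors from the UK must see the legal notice.
NOTICE_REQUIRED_COUNTRIES = {"GB"}
LEGAL_NOTICE = "Legal notice: this text is required by law in your country."


def render_page(body: str, country_code: str) -> str:
    """Dynamic serving: same URL, content varies with the visitor's country.

    country_code is an ISO 3166-1 alpha-2 code assumed to come from an
    IP geolocation lookup that is not part of this sketch.
    """
    parts = [body]
    if country_code.upper() in NOTICE_REQUIRED_COUNTRIES:
        parts.append(LEGAL_NOTICE)
    return "\n\n".join(parts)


# A visitor from the UK gets the notice; a request from a US IP, where
# Googlebot mostly crawls from, gets the page without it.
uk_version = render_page("Article text", "GB")
us_version = render_page("Article text", "US")
```

Nothing here is cloaking in itself, since the rule depends on location rather than on whether the visitor is a crawler, but it shows why the indexed version can lack content that some users see.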

Cloaking in the age of SPAs and JavaScript

Websites are becoming more and more interactive thanks to JavaScript, and some are even built as single-page applications (SPAs), where there is only one page/URL and all content is added or reloaded dynamically via AJAX. This raises the question of whether it counts as cloaking if Google cannot render a page.

It is not cloaking if Googlebot sees less or different content than site visitors solely because Google cannot execute the required JavaScript. However, ranking problems can arise if Google cannot see all or part of the content and therefore cannot index it.
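A crawler that does not execute JavaScript only sees what is in the initial HTML response. As a rough sketch (Python standard library only; the function and class names are hypothetical), one can check whether a key phrase is already present as text in that HTML or only appears once scripts run. Googlebot does render JavaScript in many cases, so a negative result signals a risk rather than proof that Google cannot see the content.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_in_initial_html(html: str, phrase: str) -> bool:
    """True if the phrase appears as text in the HTML before any JavaScript runs."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse whitespace so line breaks in the markup do not split the phrase.
    text = " ".join(" ".join(parser.chunks).split())
    return phrase in text


# Client-side rendered: the heading only exists after the script runs.
spa_html = (
    '<html><body><div id="app"></div>'
    '<script>document.getElementById("app").textContent = "Product details";</script>'
    "</body></html>"
)
# Server-side rendered: the heading is in the initial response.
ssr_html = '<html><body><div id="app"><h1>Product details</h1></div></body></html>'
```

Here `text_in_initial_html(spa_html, "Product details")` is false because the phrase only occurs inside a script, while the server-rendered version contains it as plain text.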

Conclusion

Whenever content is to be displayed differently to search engine crawlers than it is to regular visitors, it is worth taking a step back and considering why this is the case and what there is to be gained from it.

It is quite possible to create a system in which the interests of site visitors, search engines and website operators can be reconciled. In the long run, such an approach is always worthwhile, as it is the only way to work in a future-proof manner.